Enhance value through outcomes-based monitoring and evaluation

08 Mar 2021

Governments and auditors-general across Australia are increasingly emphasising the need for public sector agencies to demonstrate the outcomes and value delivered by their investments[1]. As competition for government funding across portfolios and initiatives intensifies, demonstrating outcomes becomes even more critical to securing funding. Meanwhile, the community increasingly expects greater transparency about how public money is spent and what benefits that investment delivers. Yet government departments often still struggle to gain traction and buy-in for effective outcomes-based monitoring and evaluation, and there can be a tendency to treat it as a box-ticking exercise to meet compliance requirements. But we believe this is a missed opportunity.

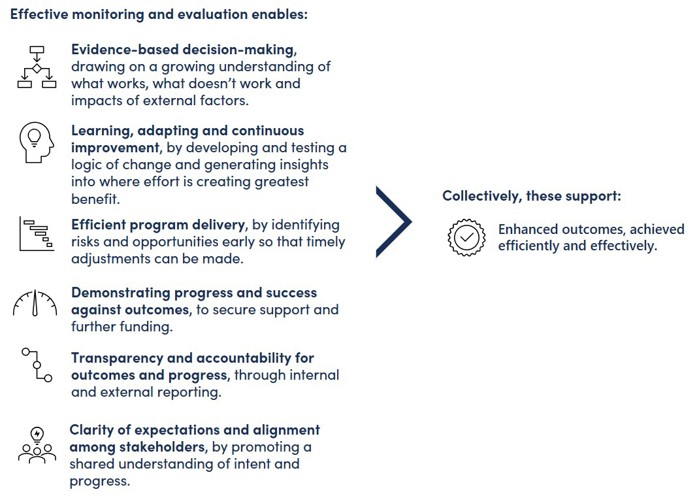

At a foundational level, monitoring and evaluation provides a structured approach to measuring progress, evaluating success and adaptively managing delivery of an initiative or investment. However, the strategic value of monitoring and evaluation extends well beyond this. When done well, outcomes-based monitoring and evaluation can enhance overall value by supporting greater efficiency and effectiveness in program delivery and achievement of desired outcomes. Through our work assisting government clients with planning and implementing monitoring and evaluation, we have observed six key benefits of outcomes-based monitoring and evaluation that can collectively help enhance the value of your initiative or investment (Figure 1). Approaching monitoring and evaluation with these multiple benefits in mind is important for getting more value from the process.

Principles for enhancing value through outcomes-based monitoring and evaluation

To help realise the benefits of outcomes-based monitoring and evaluation, we have identified five principles to guide planning and implementation of monitoring and evaluation activities. These principles reflect our experience designing outcomes-based monitoring and evaluation frameworks and undertaking evaluations for government clients across Australia. The five principles, described below, are:

- Start planning early

- Focus on outcomes, not outputs

- Measure what matters and keep it practical

- Integrate throughout planning and delivery

- Build monitoring and evaluation capability, ownership and governance across your team or organisation

Start planning early

Start planning for monitoring and evaluation early to get the most from the process. Ideally, this planning should happen alongside or soon after the strategic planning process.

Monitoring and evaluation requires deliberate consideration of expected cause and effect, which is done by developing a program logic (also known as a theory of change, change logic or outcome logic). A program logic encourages you to identify the desired outcomes first, before considering the most appropriate, efficient and effective suite of activities or actions required to achieve them. This process provides an organising framework for both the strategy and the monitoring and evaluation activities.

Embedding this discipline in your planning processes from the outset will bring rigour to your strategy, and enable you to establish your monitoring and evaluation processes sooner so that you can benefit from early insights.

Focus on outcomes, not outputs

Measuring outputs can be useful, but it reveals little about the value of an initiative. Outputs are the widgets an initiative produces, such as the number of workshops run or reports published. Outcomes reflect the change or impact the initiative seeks to achieve, and this is what the community and other stakeholders care about most. Monitoring and evaluation must focus on outcomes if you want to understand and communicate the real value of your work.

Focussing on outcomes has other benefits, too. It allows flexibility to identify the most appropriate suite of actions and outputs, and to adjust these over time if needed to secure or enhance success. It creates space for finding alternative, and potentially innovative, ways to achieve outcomes more effectively and efficiently. It also gives those delivering the initiative more autonomy in planning and refining their activities to achieve outcomes, and greater ownership of the results.

Measure what matters and keep it practical

Develop a clear and practical outcomes-based monitoring and evaluation framework to avoid spending significant effort gathering data and information that may not be necessary or even useful. The framework should align monitoring activities with the outcomes set out in the program logic, and focus on the minimum information required to track progress against those outcomes. Consider multiple lines of evidence across the monitoring and evaluation processes to help manage potential data challenges and allow triangulation of information. Draw on existing monitoring or other data collection processes where possible, but don’t be tied to something that isn’t right for your initiative: the wrong data, even if it is collected efficiently, won’t provide value.

Integrate throughout planning and delivery

Individual monitoring and evaluation activities can also provide specific benefits at different stages of your policy or planning cycle (see Figure 2, below). You can realise these benefits by integrating relevant aspects of monitoring and evaluation into plan-do-review processes across your organisation, including strategy development, program and project planning, and internal and external reporting. This will enable you to gain the more immediate benefits of monitoring and evaluation at each stage, as well as the overall benefits of enhanced outcomes and performance.

Figure 2 Summary of benefits of monitoring and evaluation at each stage in the plan-do-review cycle

Build monitoring and evaluation capability, ownership and governance across your team or organisation

When outcomes-based monitoring and evaluation is undertaken effectively across your initiative and organisation, almost everyone will have an interest or role in it. Some will have a direct role in undertaking monitoring and evaluation activities, while many more will use the insights gained through these activities to inform continuous improvement. However, staff and others may need some support to get the most benefit from this.

Start by proactively building awareness of the importance of outcomes-based monitoring and evaluation, including the many ways it can help your organisation perform better. Define clear responsibilities and accountabilities for outcomes, for monitoring and evaluation activities, and for using the findings. Ensure ownership by assigning these responsibilities and accountabilities to relevant staff at each level of your organisation, in line with the existing governance structure. Finally, invest strategically in building the capability staff need to fulfil their monitoring and evaluation responsibilities and accountabilities, including knowing what to collect and when, and how to use the insights.

Don’t let the challenges become barriers to enhancing value

Planning for and undertaking outcomes-based monitoring and evaluation can be challenging. It may require input from multiple internal and external stakeholders, alignment with complex outcomes, navigation of a vast array of data or data gaps, accounting for external factors and other unknowns, and identifying progress against outcomes that may take many years to manifest. It is important to recognise these challenges, without letting them hold you back.

While the best time to start planning for outcomes-based monitoring and evaluation was alongside strategic planning processes, the second-best time is now. Start by reflecting on how you can use monitoring and evaluation to enhance value for your initiative, organisation and stakeholders, then apply these principles to help you enjoy the full benefits that outcomes-based monitoring and evaluation can bring.

[1] For example, the Victorian Government’s Outcomes Reform and Evidence Reform, the Australian National Audit Office’s (ANAO) recent audit insights on Performance Measurement and Monitoring, and the Australia and New Zealand School of Government’s (ANZSOG) recent research paper for the Australian Public Service Review.

Ricardo Sustainability, Clean Energy and Environment
